In the ever-changing software development landscape, two technologies have become central to how we deploy and manage applications: serverless computing and containers. Both models offer significant advantages and are increasingly used by developers and businesses looking to improve their workflows and embrace cloud native approaches. However, because the two differ in fundamental ways, selecting the appropriate technology for your project can be challenging.

This article will help you grasp the core ideas, benefits, and important differences between serverless and containers. Whether you’re a seasoned developer or new to cloud computing, you’ll come away with a clearer view of which solution best meets your project’s needs.

What is Serverless Computing?

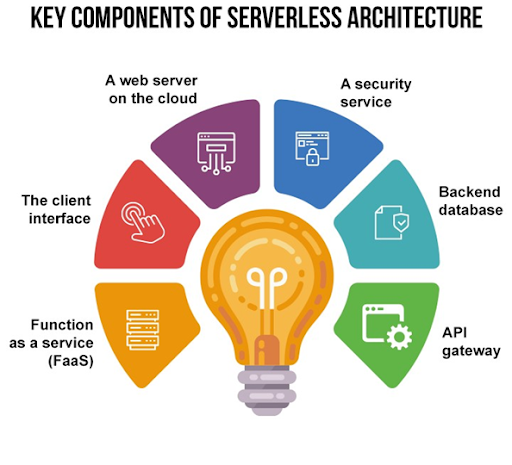

Serverless computing, despite its seemingly contradictory name, is a cloud execution model that lets developers build and run applications without managing servers. Servers still exist, but major cloud providers, including Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure, allocate machine resources dynamically on the developer’s behalf. This approach lets developers focus on what they do best, writing code, while delegating infrastructure management to the cloud provider.

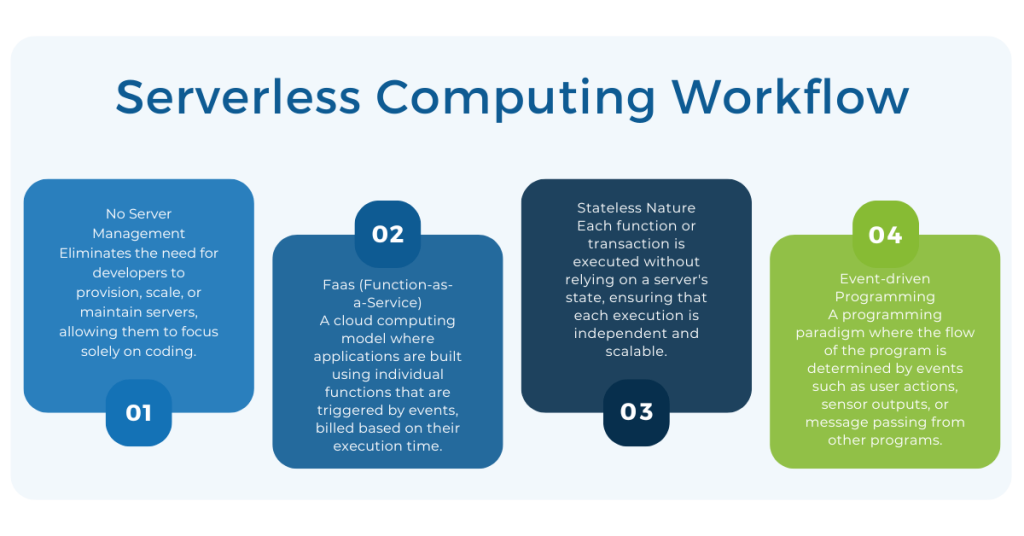

Serverless computing is built around the Function-as-a-Service (FaaS) model, which divides applications into discrete functions that run in response to events. This event-driven approach, combined with the stateless nature of serverless functions, enables high scalability and flexibility. Applications scale automatically to meet demand, from a few requests per day to thousands per second, with no manual intervention.
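To make the FaaS idea concrete, here is a minimal Python sketch of a stateless, event-driven function. It follows the `handler(event, context)` shape used by AWS Lambda’s Python runtime; the `name` field in the event and the response layout are assumptions chosen for illustration, not a required format.

```python
import json


def handler(event, context):
    """Stateless function: everything it needs arrives in the event."""
    # "name" is a hypothetical event field used only for this illustration.
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local simulation: a platform would call handler() once per incoming event.
    for sample_event in [{"name": "Ada"}, {}]:
        print(handler(sample_event, None))
```

Because the function keeps no state between calls, a platform is free to run many copies in parallel, which is what makes the automatic scaling described above possible.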

Advantages of Serverless Computing

- Reduced Operational Overhead: There is no need to provision, scale, or manage servers. These tasks are handled by the cloud provider, freeing up developers’ time to code.

- Scalability: Serverless applications may scale dynamically based on demand, making them excellent for workloads with fluctuating traffic.

- Cost-effectiveness: Because you pay only for the compute time you use, serverless is an affordable solution for many projects, particularly those with erratic traffic patterns.

Serverless computing excels when application demand is unpredictable, tasks are short-lived, and rapid deployment is critical. However, it is not a one-size-fits-all answer. Its stateless nature and cold start delays may not suit every application, especially those that require persistent connections or consistently low-latency responses.
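The cold start effect can be illustrated with a small simulation. In this sketch, the hypothetical `FunctionInstance` class stands in for one execution environment created by a platform, and the `time.sleep` call is only a placeholder for real initialization work such as loading code and dependencies. It is a simplified model, not how any particular provider implements it.

```python
import time


class FunctionInstance:
    """Stands in for one execution environment created by the platform."""

    def __init__(self, init_seconds=0.05):
        self._init_seconds = init_seconds
        self._resources = None  # nothing is loaded until the first request

    def _initialize(self):
        # Placeholder for loading code, dependencies, and connections.
        time.sleep(self._init_seconds)
        self._resources = {"ready": True}

    def invoke(self, event):
        cold = self._resources is None
        if cold:
            self._initialize()  # only the first call pays this cost
        return {"cold_start": cold, "echo": event}


if __name__ == "__main__":
    instance = FunctionInstance()
    for i in range(3):
        start = time.perf_counter()
        result = instance.invoke({"request": i})
        elapsed_ms = (time.perf_counter() - start) * 1000
        print(result["cold_start"], f"{elapsed_ms:.1f} ms")
```

Only the first invocation on a fresh instance pays the initialization cost. Later calls reuse the loaded resources until the platform discards the idle instance.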

What are Containers?

Containers bundle an application and its dependencies into a single, portable package. This encapsulation allows an application to run consistently across computing environments: from a developer’s laptop to a test environment, from staging to production, and across on-premise and cloud platforms.

How Containers Work

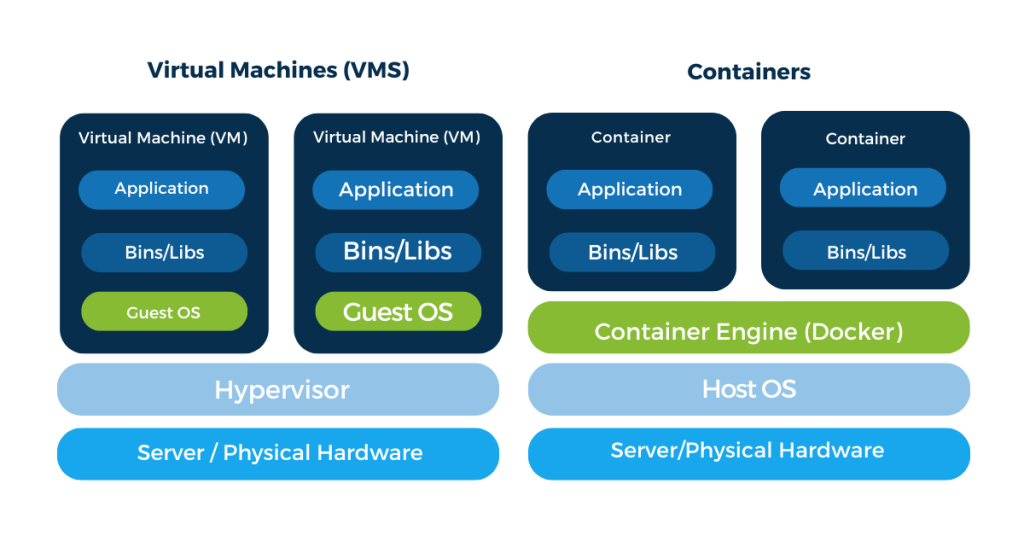

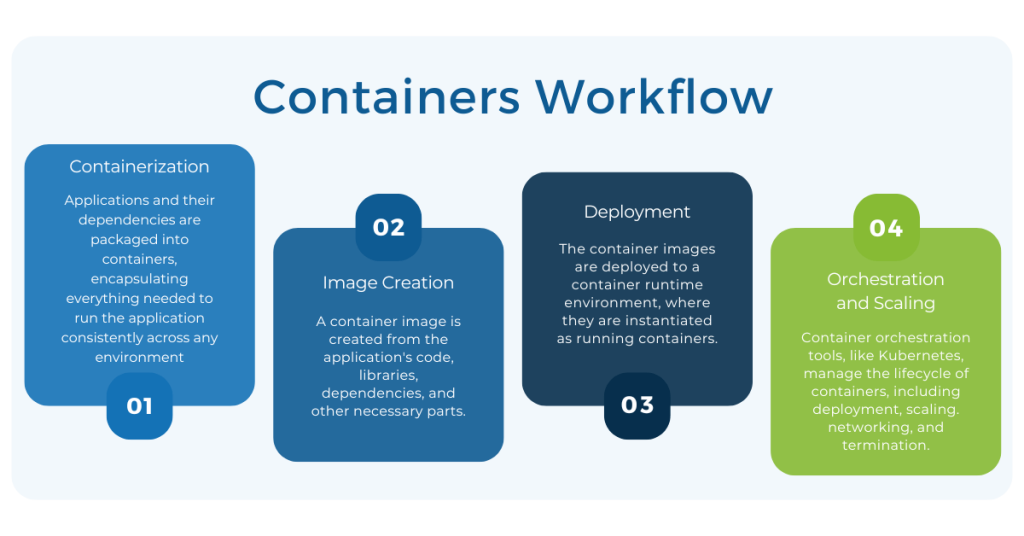

Containers rely on containerization, a lightweight form of virtualization. Unlike traditional virtual machines, each of which runs its own guest operating system, containers share the host system’s kernel while packaging the application’s code, runtime, system tools, libraries, and settings. This isolation helps the application behave uniformly and reliably regardless of where it is deployed.

Container orchestration technologies, such as Kubernetes and Docker Swarm, have become vital for managing the container lifecycle, which includes deployment, scaling, networking, and load balancing. This orchestration enables developers and IT operations teams to automate and simplify container administration at scale.

Advantages of Using Containers

- Containers create a consistent environment for programs from development to production, reducing the “it works on my machine” syndrome.

- Containers are more resource-efficient than typical virtual machines because they share the host system’s operating system kernel and, where possible, binaries and libraries.

- Containers can be spun up in seconds, allowing for rapid scaling and the ability to roll out updates or roll back to earlier versions as needed.

Containers are especially well-suited to microservices architectures, which divide programs into smaller, independently deployable services. They provide a great runtime environment for applications requiring isolation, predictability, and scalability, making them popular among developers seeking to launch complex, scalable applications.

Key Differences Between Serverless and Containers

Serverless computing and containers both aim to simplify application deployment and management, but they address different needs and circumstances. Here’s a rundown of the main distinctions between the two.

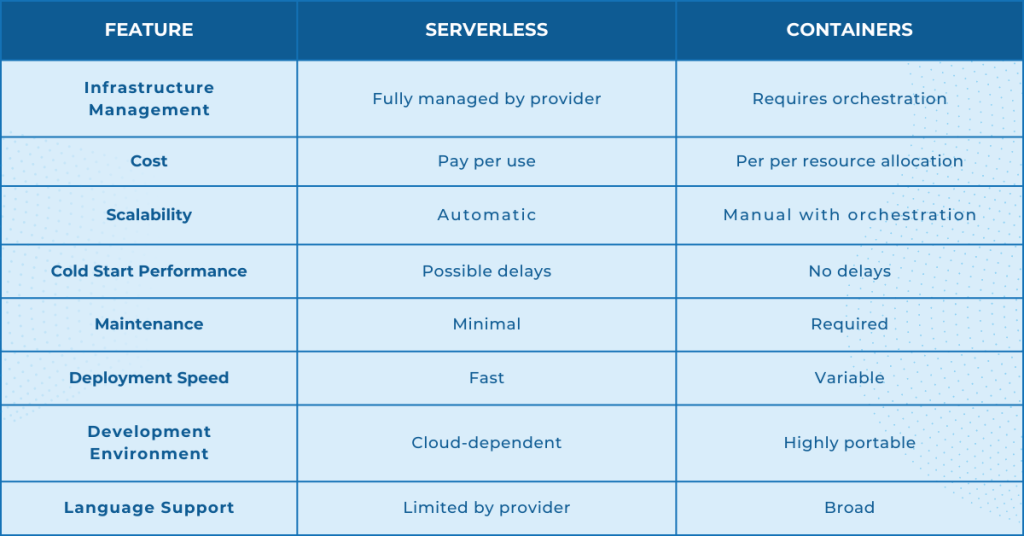

- Infrastructure Management: Serverless abstracts the infrastructure away entirely, whereas containers provide more control but require you to manage the container orchestration platform.

- Cost and Billing Model: Serverless charges for the resources functions actually consume, whereas containers are charged based on the resources allocated to container instances, regardless of usage.

- Scalability: Serverless scales on demand, whereas container scalability is governed via orchestration systems.

- Cold Start Performance: Serverless functions may respond slowly on the first invocation after a period of inactivity (a cold start), whereas containers typically run continuously, which avoids cold start delays but incurs ongoing costs.

- Maintenance: Serverless technology allows the cloud provider to administer the infrastructure, minimizing the need for maintenance duties. Container environments, however, necessitate constant administration of the container orchestration platform.

- Deployment and Updates: Serverless functions can be deployed and changed quickly, making them well suited to fast iterations. Container-based application deployments are typically fast as well, although deployment time can vary depending on the container’s complexity.

- Testing: Serverless application functionality can be challenging to simulate locally. Thus, testing is typically done in cloud settings. Containers are portable and may be operated anywhere, simplifying local development and testing.

- Portability: Containers are naturally portable, ideal for hybrid and multi-cloud deployments. Serverless solutions are more provider-specific, which could lead to vendor lock-in.

- Development Environments & Language Support: Many serverless platforms limit the languages and runtime versions available. Containers allow any language and runtime, as long as it can be containerized.

Serverless vs Container Use Cases

Understanding when to use serverless architecture or containers is critical to match your development strategy with your project’s scalability and operational efficiency requirements. Both technologies are designed for certain use cases and have specific benefits that can substantially impact your project’s success. Let’s look at the instances in which each technology thrives.

Use Cases for Serverless Architecture

Event-Driven Applications: Serverless architecture works well in contexts where apps respond to events. This paradigm is appropriate for tasks like processing file uploads, managing webhooks, and responding to user interactions on a website. The event-driven structure of serverless services enables efficient, on-demand scaling, ensuring resources are only used when needed and lowering idle costs.

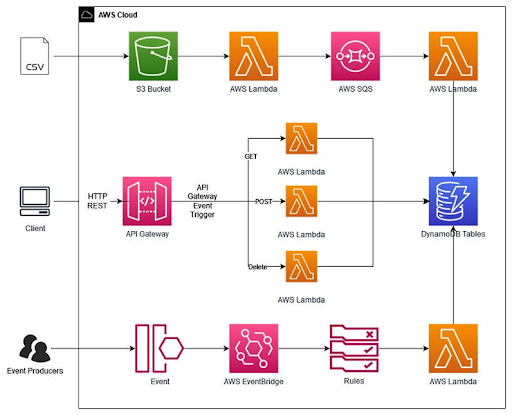

The diagram above shows an example of an AWS serverless event-driven architecture.

Short-Lived Tasks: Serverless is ideal for tasks that require immediate but limited computational resources. Whether processing photos, conducting batch processes, or managing requests that don’t need a long-running server, serverless functions may swiftly scale up to handle the traffic and then scale down, reducing resource usage and cost.

Real-time image processing is an excellent example of a serverless operation with a short lifecycle. When a user uploads a picture, a serverless function is invoked to resize, compress, and watermark it instantly. This procedure scales automatically based on the number of uploads, ensuring efficient operation and lowering expenses by shutting off immediately when the activity is completed.
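A simplified sketch of the upload-triggered flow might look like the following. The event layout mirrors the `Records` → `s3` → `bucket`/`object` structure of Amazon S3 event notifications, in which object keys arrive URL-encoded. The `thumbnails/` prefix, the `scaled_size` helper, and the job dictionary are assumptions made for this example, and the actual pixel work (resizing, compressing, watermarking) is left out because it would require an imaging library.

```python
from urllib.parse import unquote_plus


def scaled_size(width, height, max_side):
    """Return (w, h) that fits within max_side x max_side, keeping aspect ratio."""
    scale = min(1.0, max_side / max(width, height))  # never upscale
    return max(1, round(width * scale)), max(1, round(height * scale))


def handler(event, context=None):
    """Build one resize job per uploaded object named in the event."""
    jobs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        key = unquote_plus(record["s3"]["object"]["key"])  # keys are URL-encoded
        # "thumbnails/" is a hypothetical output prefix for this sketch.
        jobs.append({"bucket": bucket, "source": key, "target": f"thumbnails/{key}"})
    return jobs


if __name__ == "__main__":
    sample_event = {
        "Records": [
            {"s3": {"bucket": {"name": "uploads"}, "object": {"key": "holiday+photo.jpg"}}}
        ]
    }
    print(handler(sample_event))
    print(scaled_size(4000, 3000, 1024))
```

Each upload produces its own event, so a burst of uploads simply results in more concurrent invocations of the same function.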

Cost-effective Development: Serverless architecture can significantly benefit startups and projects with variable or unpredictable traffic patterns. By charging only for the compute time used, serverless eliminates the costs associated with idle server resources, making it a cost-effective option for intermittent-traffic projects.

Use Cases for Containers

Complex Applications: Containers provide exceptional flexibility and control, making them suitable for complex systems, particularly those built on a microservices architecture. By isolating each component in its own container, developers may manage, update, and scale sections of the program individually, improving the application’s robustness and scalability.

A microservices-based e-commerce platform is one example of a complex application well suited to containers. Such a platform is made up of multiple distinct services, including product cataloging, order administration, payment processing, and user authentication. Each service runs in its own container, which provides isolation, independent scaling, and fast deployment.

Containers make it easier to integrate and manage these diverse services, making upgrades and scaling more effective while maintaining the overall stability of the platform.

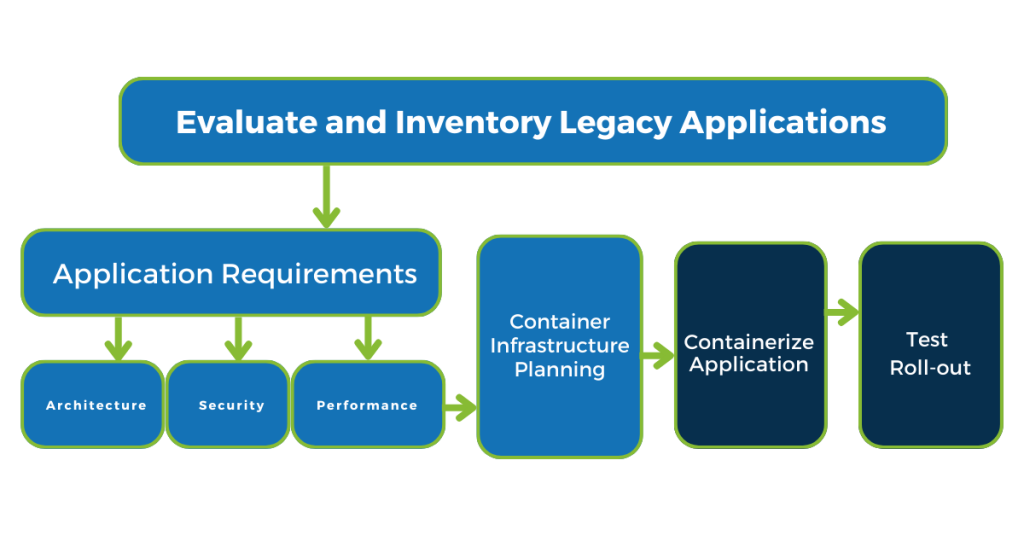

Legacy Systems: Many firms struggle to modernize legacy software without completely redesigning it. Containers offer a solution by packaging these applications together with their dependencies. This encapsulation makes deployment and management easier across environments, extending the life of aging systems without requiring an extensive rewrite.

Resource-heavy workloads: Containers are ideal for applications that require specialized software versions or intensive computing resources. Unlike serverless, which may limit runtime environments and execution times, containers allow developers complete control over the application environment and resources, guaranteeing that performance-critical applications execute efficiently and consistently.

By carefully analyzing these use cases, developers and organizations can make informed decisions about when to adopt serverless architecture versus containers. Each technology has distinct benefits that can considerably improve development productivity, scalability, and cost-effectiveness when matched to project needs.

How Should Developers Choose Between the Two?

Choosing between serverless and containers is a strategic decision that will substantially impact your project’s efficiency, scalability, and cost. This selection should be based on an in-depth review of your project’s requirements and limitations.

Long-running Processes vs. Event-driven Architecture

If your application includes long-running processes or requires persistent state, containers may be a better choice because they can support stateful applications. On the other hand, serverless architecture works well for event-driven systems in which specific events trigger functions. This architecture suits applications such as processing data in response to user input or running automated operations without manual involvement.

Expertise in Kubernetes vs. Serverless Simplicity

Managing a Kubernetes cluster for container orchestration necessitates a certain degree of skill and resources, which may be difficult for smaller teams or startups. Serverless, which provides a more straightforward method by abstracting infrastructure administration, may be desirable to teams with little operational capability. However, the decision here also depends on the team’s desire to invest in learning and growth for future benefits.

Predictability vs. Pay-as-you-go

Containers provide a more predictable pricing model, particularly for applications with consistent traffic, because resources can be allocated and optimized according to projected loads. With its pay-as-you-go pricing model, serverless can reduce costs for applications with variable traffic by eliminating the cost of idle resources. To find the most cost-effective solution, carry out a thorough cost analysis based on your application’s traffic patterns and resource use.
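A rough way to start such a cost analysis is to model both billing styles side by side. The sketch below assumes a usage-based model billed per GB-second of compute plus a per-request fee, and an allocation-based model billed per instance-hour. Every rate in it is a made-up placeholder, not a real provider price, so substitute current figures from your provider before drawing conclusions.

```python
def serverless_monthly_cost(requests, avg_seconds, memory_gb,
                            price_per_gb_second, price_per_million_requests):
    """Usage-based billing: pay for the compute consumed plus a per-request fee."""
    compute = requests * avg_seconds * memory_gb * price_per_gb_second
    request_fee = requests / 1_000_000 * price_per_million_requests
    return compute + request_fee


def container_monthly_cost(instances, hourly_price, hours=730):
    """Allocation-based billing: pay for running instances, busy or idle."""
    return instances * hourly_price * hours


if __name__ == "__main__":
    # All rates below are hypothetical placeholders for illustration only.
    rates = {"price_per_gb_second": 0.00002, "price_per_million_requests": 0.20}
    fixed = container_monthly_cost(instances=2, hourly_price=0.05)
    for monthly_requests in (1_000_000, 100_000_000):
        usage = serverless_monthly_cost(monthly_requests, avg_seconds=0.2,
                                        memory_gb=0.5, **rates)
        cheaper = "serverless" if usage < fixed else "containers"
        print(f"{monthly_requests:>11,} requests: serverless={usage:.2f} "
              f"containers={fixed:.2f} -> {cheaper}")
```

With these placeholder numbers, the usage-based model wins at low volume and the fixed allocation wins at sustained high volume, which matches the trade-off described above. Where the crossover falls depends entirely on the real rates and workload.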

Flexibility and Portability vs. Provider Specificity

Containers, especially when managed using Kubernetes, offer greater flexibility and portability across cloud providers and on-premise environments, lowering the risk of vendor lock-in. Although serverless systems allow rapid development and deployment, they are more provider-specific, which limits flexibility and increases reliance on a single vendor. Assessing how much vendor independence matters for your project will help you select the appropriate architecture.

Scalability and Maintenance

Both serverless and containers provide scalability; however, the way scaling is performed and administered differs. Serverless scales automatically with demand, eliminating the need for manual intervention, but it can make monitoring and troubleshooting more difficult. Containers demand a more hands-on approach to scaling and maintenance in exchange for greater control over the environment and over how scaling happens. Evaluating your team’s long-term scalability requirements and maintenance skills is critical.
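To give a sense of what hands-on container scaling involves, the sketch below models the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler: the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. The function name, the default bounds, and the sample numbers are assumptions for this example, and the real autoscaler applies additional tolerances and stabilization behavior that are omitted here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Proportional scaling: ceil(current * observed / target), clamped to bounds."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # Hypothetical readings: average CPU utilization (%) against a 60% target.
    for observed in (20, 60, 90, 300):
        print(observed, desired_replicas(4, observed, 60))
```

Someone on the team still has to choose the metric, the target, and the replica bounds, which is part of the operational work that serverless platforms take off your hands.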

Cloud Security vs. Self-Managed Security

Serverless applications and container-based applications differ in their security models and considerations. Serverless architecture abstracts the underlying infrastructure, allowing developers to focus solely on application code. In serverless, security responsibilities such as patching and server management are offloaded to the cloud provider. However, the challenge lies in trusting the provider’s security measures and ensuring proper configuration of functions and permissions.

On the other hand, container-based applications, like those using Docker, encapsulate an application and its dependencies, providing consistency across different environments. Container security involves securing the container images, the orchestrator (e.g., Kubernetes), and the underlying host. Developers need to address vulnerabilities in both the container images and the runtime, implement network segmentation, and manage access controls.

While both architectures have their security challenges, understanding and addressing these specific concerns is crucial for maintaining the integrity and confidentiality of applications in either environment.

Summary

Understanding the differences between serverless computing and containers is critical for developers and businesses seeking to make informed decisions about their cloud native strategy. You can pick the best technology for your project by considering its specific requirements, such as scalability, pricing, and operational complexity.

As the cloud computing landscape evolves, staying informed about new technologies and open to adopting them will keep your projects current and efficient.

For projects requiring specialized expertise in serverless computing, containers, or any aspect of software development, ParallelStaff offers a comprehensive suite of nearshore software development solutions. Our team of experienced professionals is equipped to support your software development needs, providing the flexibility and expertise to help you navigate the choice between serverless and containerized architectures.

Key Services:

- Custom Software Development

- Cloud Native Application Development

- DevOps and Infrastructure Management

Benefits of Partnering with ParallelStaff:

- Access to a vast pool of talent

- Cost-effective development solutions

- Flexible engagement models

Whether you’re exploring serverless architecture, containerized applications, or looking to optimize your current cloud native strategy, ParallelStaff can enhance your project’s success.

Ready to accelerate your software development journey? Book a call with us today and explore how we can support your project’s unique requirements.
